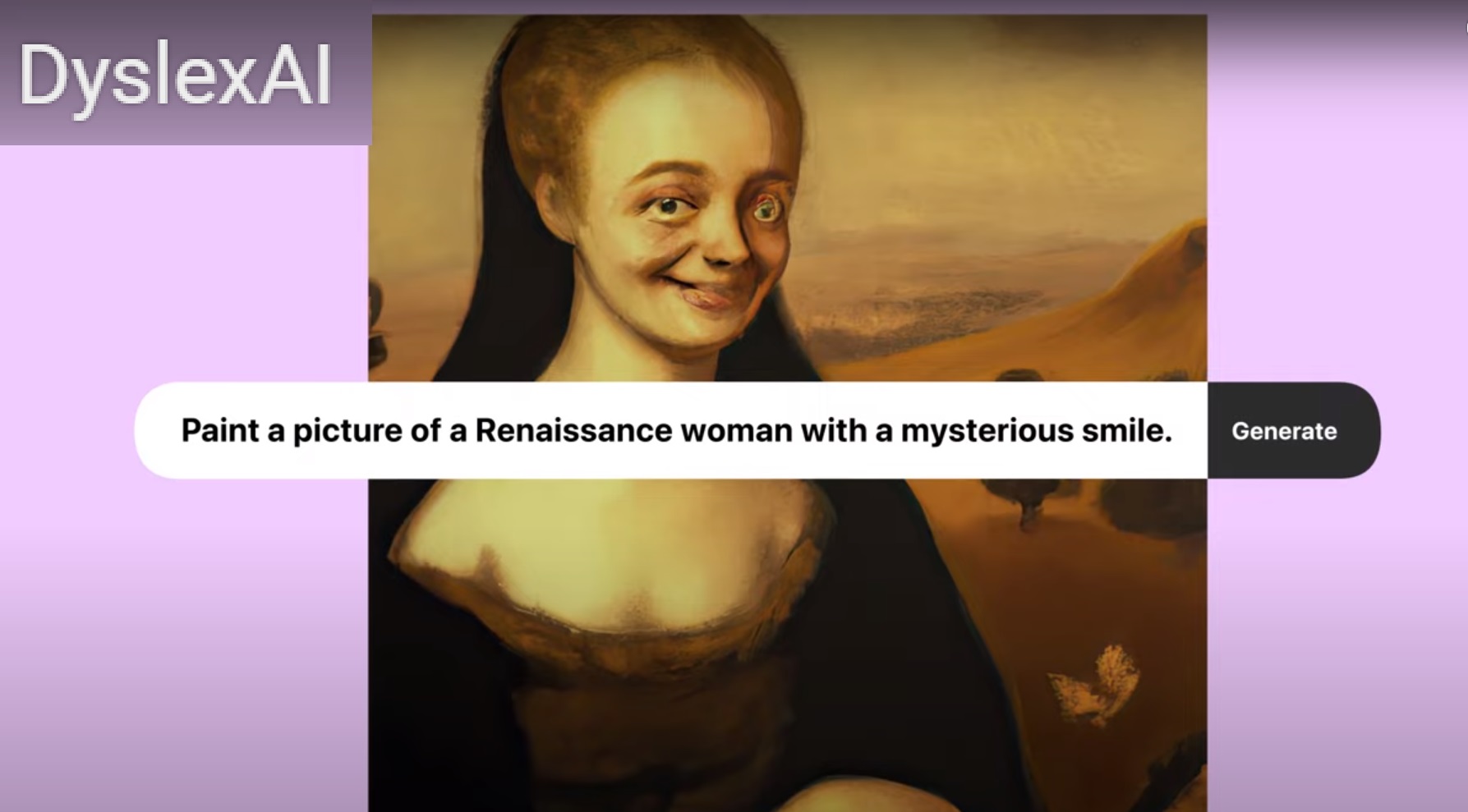

The rise of AI is truly remarkable. It is transforming the way we work, live, and interact with each other, and it is reaching into so many other parts of our lives. However, while AI aggregates, dyslexic thinking skills innovate. Used in the right way, AI could be the perfect co-pilot for dyslexics to really move the world forward. In light of this, Virgin and Made By Dyslexia have launched a brilliant campaign to show what is possible when AI and dyslexic thinking come together. The film below says it all.

As the film shows, AI can’t replace the soft skills in which dyslexics score highly, such as innovating, lateral thinking, complex problem solving, and communicating.

If you ask AI for advice on how to scale a brand built around a record company, it offers valuable insights, but its solution lacks creative instinct and spontaneous decision-making. If I hadn’t relied on my intuition, lateral thinking and willingness to take a risk, I would never have jumped from scaling a record company to launching an airline, the move that grew Virgin into the brand it is today.

Together, dyslexic thinkers and AI are an unstoppable force, so it’s great to see that 72% of dyslexics see AI tools (like ChatGPT) as a vital starting point for their projects and ideas, according to new research by Made By Dyslexia and Randstad Enterprise. With help from AI, dyslexics have limitless power to change the world, but we need everyone to welcome our dyslexic minds. If businesses fail to do this, they risk being left behind. As the Value of Dyslexia report highlighted, dyslexic skillsets will mirror the World Economic Forum’s future skills needs by the end of this year (2025). Given the speed at which technology and AI have progressed, this crossover has arrived two years earlier than predicted.

Image: Sarah Rogers/MITTR

With all of this in mind, it’s concerning to see such a big gap between how well HR departments think they understand and support dyslexia in the workplace and the experience of dyslexic people themselves.

The new research also shows that 66% of HR professionals believe they have support structures in place for dyslexia, yet only 16% of dyslexics feel supported in the workplace. It’s even sadder to see that only 14% of dyslexic employees believe their workplace understands the value of dyslexic thinking. There is clearly work to be done here.

To empower dyslexic thinking in the workplace (which has the two-fold benefit of bringing out the best in your people and in your business), you need to understand dyslexic thinking skills. To help with this, Made By Dyslexia is launching a workplace training course later this year on LinkedIn Learning, and you can sign up for it now. The course will be free to access, and I’m delighted that Virgin companies from all over the world have signed up for it, from Virgin Australia to Virgin Active Singapore, Virgin Plus Canada and Virgin Voyages. It’s such an insightful course, designed by experts at Made By Dyslexia to educate people on how to understand, support, and empower dyslexic thinking in the workplace, and to make sure businesses are ready for the future.

It’s always inspiring to see how Made By Dyslexia empowers dyslexics and shows the world the limitless power of dyslexic thinking. If businesses can harness this power, and if dyslexics can harness the power of AI, we can really drive the future forward.

Richard Branson, Founder at Virgin Group
